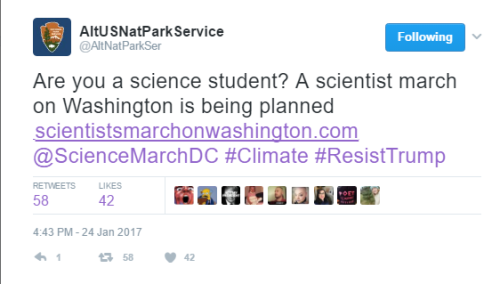


GUYS https://twitter.com/AltNatParkSer/status/824054953404669953 http://www.scientistsmarchonwashington.com/ THE NATIONAL PARK SERVICE IS IN OPEN REBELLION

More Posts from Smparticle2 and Others

Webb 101: 10 Facts about the James Webb Space Telescope

Did you know…?

1. Our upcoming James Webb Space Telescope will act like a powerful time machine – because it will capture light that’s been traveling across space for as long as 13.5 billion years, when the first stars and galaxies were formed out of the darkness of the early universe.

2. Webb will be able to see infrared light. This is light just beyond the red end of the visible spectrum, outside the range our eyes can detect.

3. Webb’s unprecedented sensitivity to infrared light will help astronomers to compare the faintest, earliest galaxies to today’s grand spirals and ellipticals, helping us to understand how galaxies assemble over billions of years.

Hubble’s infrared look at the Horsehead Nebula. Credit: NASA/ESA/Hubble Heritage Team

4. Webb will be able to see right through and into massive clouds of dust that are opaque to visible-light observatories like the Hubble Space Telescope. Those clouds are where stars and planetary systems are born.

5. In addition to seeing things inside our own solar system, Webb will tell us more about the atmospheres of planets orbiting other stars, and perhaps even find the building blocks of life elsewhere in the universe.

Credit: Northrop Grumman

6. Webb will orbit the Sun a million miles away from Earth, at the place called the second Lagrange point. (L2 is about four times farther away than the moon!)

7. To preserve Webb’s heat-sensitive vision, it has a ‘sunshield’ the size of a tennis court, which gives the telescope the equivalent of an SPF of 1 million! The sunshield also creates a temperature difference of almost 600 degrees Fahrenheit between the hot and cold sides of the spacecraft.

8. Webb’s 18-segment primary mirror is over 6 times bigger in area than Hubble’s and will be ~100x more powerful. (How big is it? 6.5 meters in diameter.)

9. Webb’s 18 primary mirror segments can each be individually adjusted to work as one massive mirror. They’re covered with a golf ball’s worth of gold, which optimizes them for reflecting infrared light (the coating is so thin that a human hair is 1,000 times thicker!).

10. Webb will be so sensitive that it could detect the heat signature of a bumblebee at the distance of the moon, and could see details the size of a US penny at a distance of about 40 km.
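Two of the numbers above can be sanity-checked with a little arithmetic. This sketch assumes a mean Earth-Moon distance of 384,400 km and a US penny diameter of 19.05 mm (neither figure comes from this post) and uses the small-angle approximation to turn "a penny at about 40 km" into an angle on the sky.

```python
import math

# Item 6: how far L2 is compared with the Moon.
MILE_KM = 1.609344                    # international mile in kilometres
L2_DISTANCE_KM = 1_000_000 * MILE_KM  # "a million miles", as the post rounds it
MOON_DISTANCE_KM = 384_400            # assumed mean Earth-Moon distance

# Item 10: apparent size of a penny seen from about 40 km.
PENNY_DIAMETER_M = 0.01905            # assumed US one-cent coin diameter (19.05 mm)
RAD_TO_ARCSEC = 180 / math.pi * 3600  # radians -> arcseconds


def distance_ratio() -> float:
    """How many times farther the L2 point is than the Moon."""
    return L2_DISTANCE_KM / MOON_DISTANCE_KM


def penny_angle_arcsec(distance_m: float = 40_000) -> float:
    """Angular diameter of a penny, using the small-angle approximation."""
    return PENNY_DIAMETER_M / distance_m * RAD_TO_ARCSEC


if __name__ == "__main__":
    print(f"L2 is about {distance_ratio():.1f} times the Moon's distance")
    print(f"A penny at 40 km spans about {penny_angle_arcsec():.2f} arcseconds")
```

Both results line up with the list: L2 comes out at roughly four times the Moon's distance, and a penny at 40 km spans about a tenth of an arcsecond.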

BONUS! Over 1,200 scientists, engineers and technicians from 14 countries (and more than 27 U.S. states) have taken part in designing and building Webb. The entire project is a joint mission between NASA and the European and Canadian Space Agencies. The telescope part of the observatory was assembled in the world’s largest cleanroom at our Goddard Space Flight Center in Maryland.

Webb is currently being tested at our Johnson Space Center in Houston, TX.

Afterwards, the telescope will travel to Northrop Grumman to be mated with the spacecraft and undergo final testing. Once complete, Webb will be packed up and shipped by boat to its launch site in French Guiana, where a European Space Agency Ariane 5 rocket will take it into space.

Learn more about the James Webb Space Telescope HERE, or follow the mission on Facebook, Twitter and Instagram.

Make sure to follow us on Tumblr for your regular dose of space: http://nasa.tumblr.com.

Finding Your Way Around in an Uncertain World

Suppose you woke up in your bedroom with the lights off and wanted to get out. While heading toward the door with your arms out, you would predict the distance to the door based on your memory of your bedroom and the steps you have already taken. If you touched a wall or furniture, you would refine the prediction. This shows how important it is to supplement limited sensory input with knowledge of your own actions to grasp the situation. How the brain carries out such a complex cognitive function is an important topic in neuroscience.

Dealing with limited sensory input is also a ubiquitous issue in engineering. A car navigation system, for example, can predict the current position of the car based on the rotation of the wheels even when a GPS signal is missing or distorted in a tunnel or under skyscrapers. As soon as the clean GPS signal becomes available, the navigation system refines and updates its position estimate. Such iteration of prediction and update is described by a theory called “dynamic Bayesian inference.”
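The car-navigation example can be sketched as a one-dimensional Kalman filter, the simplest form of dynamic Bayesian inference, in which the belief about position is a Gaussian (a mean and a variance). This is an illustrative sketch, not the method of any real navigation product: it assumes the car moves along a straight road, wheel rotation reports the distance travelled each step, GPS reports a noisy position or `None` when the signal is missing, and the noise variances are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple


def kalman_track(
    odometry: Sequence[float],
    gps: Sequence[Optional[float]],
    x0: float = 0.0,
    p0: float = 1.0,
    motion_var: float = 0.5,  # hypothetical uncertainty added by each wheel-based step
    gps_var: float = 4.0,     # hypothetical GPS measurement noise variance
) -> List[Tuple[float, float]]:
    """Return the (mean, variance) of the position belief after each step."""
    x, p = x0, p0
    history = []
    for step, reading in zip(odometry, gps):
        # Predict: shift the belief by the distance the wheels report.
        # Uncertainty grows because wheel-based estimates drift.
        x = x + step
        p = p + motion_var
        # Update: only when a GPS reading exists (None = tunnel or skyscrapers).
        if reading is not None:
            k = p / (p + gps_var)      # Kalman gain: how much to trust the GPS
            x = x + k * (reading - x)  # move the estimate toward the reading
            p = (1 - k) * p            # the update always reduces uncertainty
        history.append((x, p))
    return history


if __name__ == "__main__":
    wheels = [1.0, 1.0, 1.0, 1.0, 1.0]
    signal = [1.1, None, None, None, 5.2]  # GPS lost for three steps
    for mean, var in kalman_track(wheels, signal):
        print(f"position {mean:.2f}, std {var ** 0.5:.2f}")
```

While the GPS reading is `None`, the filter keeps advancing the estimate from the wheels alone and its variance grows; the first clean reading pulls the estimate back toward GPS and shrinks the variance. That is the prediction-update cycle described above.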

In a collaboration of the Neural Computation Unit and the Optical Neuroimaging Unit at the Okinawa Institute of Science and Technology Graduate University (OIST), Dr. Akihiro Funamizu, Prof. Bernd Kuhn, and Prof. Kenji Doya analyzed the brain activity of mice approaching a target under interrupted sensory inputs. This research is supported by the MEXT Kakenhi Project on “Prediction and Decision Making” and the results were published online in Nature Neuroscience on September 19th, 2016.

The team performed surgeries in which a small hole was made in the skulls of mice and a glass cover slip was implanted onto each of their brains over the parietal cortex. Additionally, a small metal headplate was attached in order to keep the head still under a microscope. The cover slip acted as a window through which researchers could record the activities of hundreds of neurons using a calcium-sensitive fluorescent protein that was specifically expressed in neurons in the cerebral cortex. Upon excitation of a neuron, calcium flows into the cell, which causes a change in fluorescence of the protein. The team used a method called two-photon microscopy to monitor the change in fluorescence from the neurons at different depths of the cortical circuit (Figure 1).

(Figure 1: Parietal Cortex. A depiction of the location of the parietal cortex in a mouse brain can be seen on the left. On the right, neurons in the parietal cortex are imaged using two-photon microscopy)
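Calcium-imaging recordings are commonly summarized as a relative fluorescence change, ΔF/F = (F − F0) / F0, where F0 is the neuron's resting fluorescence. The article does not say how the team normalized their data, so the baseline rule below (mean of the dimmest 20% of frames) and the activity threshold are hypothetical choices for illustration.

```python
from typing import List, Sequence


def delta_f_over_f(trace: Sequence[float], baseline_fraction: float = 0.2) -> List[float]:
    """Express a raw fluorescence trace as dF/F = (F - F0) / F0."""
    if not trace:
        raise ValueError("trace must not be empty")
    # Assumed baseline: mean of the dimmest fraction of frames, when the
    # neuron is presumably at rest and intracellular calcium is low.
    n = max(1, int(len(trace) * baseline_fraction))
    f0 = sum(sorted(trace)[:n]) / n
    return [(f - f0) / f0 for f in trace]


def active_frames(dff: Sequence[float], threshold: float = 0.3) -> List[int]:
    """Indices of frames whose dF/F exceeds a (hypothetical) activity threshold."""
    return [i for i, v in enumerate(dff) if v > threshold]


if __name__ == "__main__":
    raw = [100.0] * 8 + [150.0, 200.0]  # toy trace: a calcium transient at the end
    dff = delta_f_over_f(raw)
    print(dff)
    print(active_frames(dff))
```

Binarizing the trace this way is one simple route to the "probability of becoming active" used later in the decoding analysis.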

The research team built a virtual reality system in which a mouse could be made to believe it was walking around freely while it was actually held in place under a microscope. The system included an air-floated Styrofoam ball on which the mouse could walk and a sound system that could emit sounds simulating movement toward or past a sound source (Figure 2).

(Figure 2: Acoustic Virtual Reality System. Twelve speakers are placed around the mouse. The speakers generate sound based on the movement of the mouse running on the spherical treadmill (left). When the mouse reaches the virtual sound source it will get a droplet of sugar water as a reward)

At the start of each trial, the virtual sound source is placed 67 to 134 cm in front of the mouse and 25 cm to its left. As the mouse steps forward and rotates the ball, the sound is adjusted to mimic the mouse approaching the source: it grows louder and shifts in direction. When the mouse arrives alongside the sound source, drops of sugar water come out of a tube in front of the mouse as a reward for reaching the goal. Once the mice learn that they will be rewarded at the goal position, they lick the tube more and more as they approach it, in anticipation of the sugar water.
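The geometry of the acoustic cue can be sketched as follows: the source starts somewhere 67 to 134 cm ahead and 25 cm to the left, and as the mouse runs forward its distance shrinks and its bearing swings toward the side. The article does not describe how the twelve speakers were driven, so the straight-ahead running and the inverse-distance loudness rule here are hypothetical simplifications.

```python
import math
from typing import Tuple


def source_cue(start_ahead_cm: float, travelled_cm: float,
               lateral_cm: float = 25.0,
               ref_cm: float = 25.0) -> Tuple[float, float, float]:
    """Distance (cm), bearing (degrees) and relative loudness of the virtual source.

    The source starts `start_ahead_cm` in front of the mouse and `lateral_cm`
    to its left; the mouse has run `travelled_cm` straight forward.
    """
    ahead = start_ahead_cm - travelled_cm  # remaining forward offset to the source
    distance = math.hypot(ahead, lateral_cm)
    # Bearing: 0 = straight ahead, 90 = directly to the left (the goal position).
    bearing = math.degrees(math.atan2(lateral_cm, ahead))
    # Hypothetical inverse-distance gain, equal to 1 when the mouse is alongside.
    gain = ref_cm / distance
    return distance, bearing, gain


if __name__ == "__main__":
    start = 100.0  # one starting distance within the 67-134 cm range
    for travelled in (0.0, 25.0, 50.0, 75.0, 100.0):
        d, b, g = source_cue(start, travelled)
        print(f"run {travelled:5.1f} cm -> distance {d:6.1f} cm, bearing {b:5.1f} deg, gain {g:.2f}")
```

As `travelled_cm` approaches `start_ahead_cm`, the gain rises toward 1 and the bearing moves from nearly straight ahead to 90 degrees, which is the "louder and shifting in direction" cue the mouse hears on its way to the reward.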

The team then tested what happens if the sound is removed over certain stretches of simulated distance, each about 20 cm long. Even without sound, the mice increased licking as they came closer to the goal position in anticipation of the reward (Figure 3). This means that the mice predicted the goal distance based on their own movement, just as the dynamic Bayesian filter of a car navigation system predicts the car’s location from the rotation of its tires in a tunnel. Many neurons changed their activity depending on the distance to the target, and interestingly, many of them maintained their activity even when the sound was turned off. Additionally, when the team injected a drug that suppresses neural activity in a region of the mouse brain called the parietal cortex, the mice no longer increased licking when the sound was omitted. This suggests that the parietal cortex plays a role in predicting the goal position.

(Figure 3: Estimation of the goal distance without sound. Mice are eager to find the virtual sound source to get the sugar water reward. As the mice get closer to the goal, they increase licking in expectation of the sugar water reward. They increased licking when the sound was on, and also when it was omitted. This result suggests that mice estimate the goal distance by taking their own movement into account)

To further explore what the activity of these neurons represents, the team applied a probabilistic neural decoding method. Each neuron was observed over more than 150 trials, so its probability of becoming active at different distances to the goal could be estimated. This allowed the team to estimate each mouse’s distance to the goal at each moment from the recorded activity of about 50 neurons. Remarkably, the parietal cortex neurons predicted the change in goal distance caused by the mouse’s movement even in segments where sound feedback was omitted (Figure 4). When the sound was present, the decoded distance became more accurate. These results show that the parietal cortex predicts the distance to the goal from the mouse’s own movements even when sensory inputs are missing, and updates the prediction when sensory inputs are available, in the same form as dynamic Bayesian inference.

(Figure 4: Distance estimation in the parietal cortex utilizes dynamic Bayesian inference. Probabilistic neural decoding allows for the estimation of the goal distance from neuronal activity imaged from the parietal cortex. Neurons could predict the goal distance even during sound omissions. The prediction became more accurate when sound was given. These results suggest that the parietal cortex predicts the goal distance from movement and updates the prediction with sensory inputs, in the same way as dynamic Bayesian inference)
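The decoding idea (learn each neuron's probability of being active at each distance, then combine those probabilities to infer distance from a single snapshot of activity) can be illustrated with a Bernoulli naive Bayes decoder. This is a simplified stand-in, not necessarily the decoder the team used: it assumes binary activity, neurons that are independent given the distance, a flat prior over distance bins, and a hypothetical smoothing constant.

```python
import math
from typing import List, Sequence


def fit_tuning(activity: Sequence[Sequence[int]], bins: Sequence[int],
               n_bins: int, alpha: float = 1.0) -> List[List[float]]:
    """Estimate P(neuron active | distance bin) with add-alpha smoothing.

    activity[t][i] is 1 if neuron i was active in sample t, else 0;
    bins[t] is the distance bin the mouse was in during sample t.
    """
    if not activity:
        raise ValueError("need at least one training sample")
    n_neurons = len(activity[0])
    active = [[0.0] * n_neurons for _ in range(n_bins)]
    counts = [0] * n_bins
    for row, b in zip(activity, bins):
        counts[b] += 1
        for i, a in enumerate(row):
            active[b][i] += a
    return [[(active[b][i] + alpha) / (counts[b] + 2 * alpha)
             for i in range(n_neurons)] for b in range(n_bins)]


def decode(tuning: List[List[float]], pattern: Sequence[int]) -> List[float]:
    """Posterior over distance bins for one activity pattern (flat prior)."""
    log_post = []
    for probs in tuning:
        # Independent neurons: log-likelihoods add across the population.
        log_post.append(sum(math.log(p) if a else math.log(1 - p)
                            for p, a in zip(probs, pattern)))
    m = max(log_post)  # subtract the max before exponentiating, for stability
    weights = [math.exp(lp - m) for lp in log_post]
    total = sum(weights)
    return [w / total for w in weights]


if __name__ == "__main__":
    # Toy data: neuron 0 fires far from the goal (bin 0), neuron 1 near it (bin 1).
    train = [[1, 0], [1, 0], [0, 1], [0, 1]]
    where = [0, 0, 1, 1]
    tuning = fit_tuning(train, where, n_bins=2)
    print(decode(tuning, [1, 0]))
```

The decoded distance at each moment can then be read off, for example, as the bin with the highest posterior probability.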

The hypothesis that the neural circuit of the cerebral cortex realizes dynamic Bayesian inference has been proposed before, but this is the first experimental evidence showing that a region of the cerebral cortex realizes dynamic Bayesian inference using action information. In dynamic Bayesian inference, the brain predicts the present state of the world based on past sensory inputs and motor actions. “This may be the basic form of mental simulation,” Prof. Doya says. Mental simulation is the fundamental process for action planning, decision making, thought and language. Prof. Doya’s team has also shown that a neural circuit including the parietal cortex was activated when human subjects performed mental simulation in a functional MRI scanner. The research team aims to further analyze those data to obtain the whole picture of the mechanism of mental simulation.
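The predict-and-update cycle of dynamic Bayesian inference can be written as a recursion. In this notation (introduced here for illustration, not taken from the study), $x_t$ is the distance to the goal at time $t$, $a_t$ is the animal's own movement, and $o_t$ is the sound input:

```latex
\begin{align}
\text{Predict:}\quad p(x_t \mid o_{1:t-1}, a_{1:t})
  &= \int p(x_t \mid x_{t-1}, a_t)\, p(x_{t-1} \mid o_{1:t-1}, a_{1:t-1})\, dx_{t-1} \\
\text{Update:}\quad p(x_t \mid o_{1:t}, a_{1:t})
  &\propto p(o_t \mid x_t)\, p(x_t \mid o_{1:t-1}, a_{1:t})
\end{align}
```

Movement enters through the prediction step. When the sound is omitted there is no $o_t$, so the update step is skipped and the belief is carried forward by the prediction alone, which is the behavior the parietal neurons showed during silent segments.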

Understanding the neural mechanism of mental simulation gives an answer to the fundamental question of “How are thoughts formed?” It should also contribute to our understanding of the causes of psychiatric disorders caused by flawed mental simulation, such as schizophrenia, depression, and autism. Moreover, by understanding the computational mechanisms of the brain, it may become possible to design robots and programs that think like the brain does. This research contributes to the overall understanding of how the brain allows us to function.

Entering the house owned by a friend working in the private sector, the grad student anxiously reassesses many of his life choices.

It’s been my experience that you can nearly always enjoy things if you make up your mind firmly that you will.

L.M. Montgomery, Anne of Green Gables (via books-n-quotes)

Engineers build world’s lightest mechanical watch thanks to graphene

An ultralight high-performance mechanical watch made with graphene is unveiled today in Geneva at the Salon International De La Haute Horlogerie thanks to a unique collaboration.

The University of Manchester has collaborated with watchmaking brand Richard Mille and McLaren F1 to create the world’s lightest mechanical chronograph by pairing leading graphene research with precision engineering.

The RM 50-03 watch was made using a unique composite incorporating graphene to manufacture a strong but lightweight new case to house the delicate watch mechanism. The graphene composite known as Graph TPT weighs less than previous similar materials used in watchmaking.

Graphene is the world’s first two-dimensional material, just one atom thick. It was first isolated at The University of Manchester in 2004 and has the potential to revolutionise a large number of applications, including high-performance composites for the automotive and aerospace industries, flexible, bendable mobile phones and tablets, and next-generation energy storage.


Grading a slew of mediocre final papers, the grad student watches his months of arduous teaching bear little fruit.

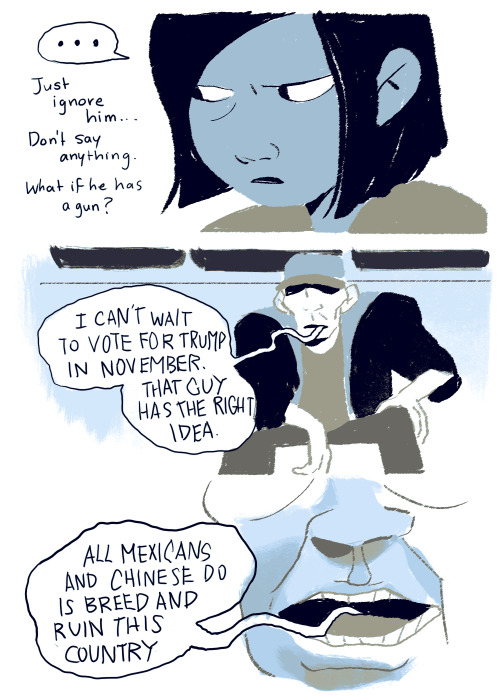

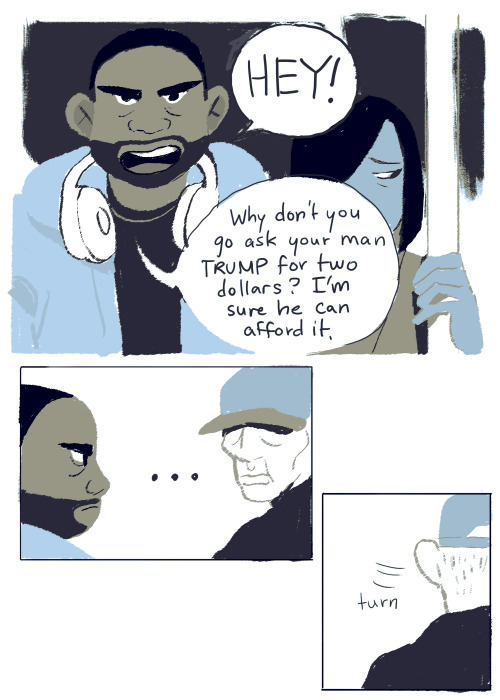

It’s been an emotional week. I wanted to share this encounter I had with a very hateful man on the Pittsburgh bus because it reminds me that there are brave people in this world. Let’s all do everything we can to stand up for each other.

In California’s Salinas Valley, known as the “Salad Bowl of the World,” a push is underway to expand agriculture’s adoption of technology. Special correspondent Cat Wise reports on how such innovation is providing new opportunities for the Valley’s largely Hispanic population. Watch her full piece here: http://to.pbs.org/2gLmEga
