Writto - Goal: Forward

More Posts from Writto and Others

when i saw and heard chaoscontrolled123’s wonderful post about the 600 years old butt song from hell i just knew that i had to bring the piece to life

i present to you, the butt song from hell, with lyrics, so you can even sing along if you want:

butt song from hell

this is the butt song from hell

we sing from our asses while burning in purgatory

the butt song from hell

the butt song from hell

butts

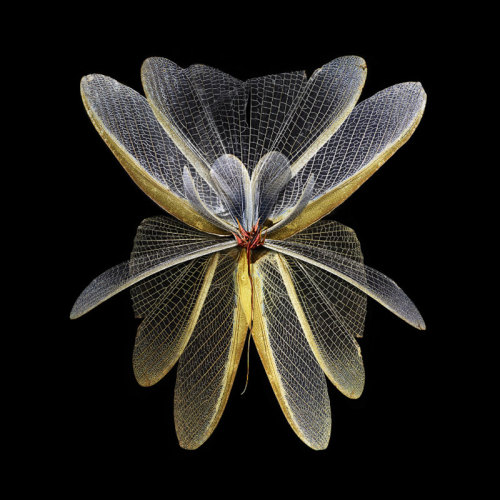

Wings of desire, Seb Janiak

Wonderful Color Mosaics by Artist Erin Hanson

Erin Hanson transforms landscapes into abstract color mosaics using an impasto application of paint, where thick layers of paint create almost sculptural forms on the canvas. She tries to use as few strokes as possible without lamination, a process that has been called “open Impressionism.”

source: library and chambre237

Image-To-Image Demo

A set of interactive browser-based machine learning experiments put together by Christopher Hesse turns doodles into images using neural networks trained on various image datasets. For example, in ‘edges2cats’, your simple doodle is recreated with image data from a dataset of cat pictures:

Recently, I made a Tensorflow port of pix2pix by Isola et al., covered in the article Image-to-Image Translation in Tensorflow. I’ve taken a few pre-trained models and made an interactive web thing for trying them out. Chrome is recommended.

The pix2pix model works by training on pairs of images such as building facade labels to building facades, and then attempts to generate the corresponding output image from any input image you give it. The idea is straight from the pix2pix paper, which is a good read. …

[On edges2cats:] Trained on about 2k stock cat photos and edges automatically generated from those photos. Generates cat-colored objects, some with nightmare faces. The best one I’ve seen yet was a cat-beholder.

Some of the pictures look especially creepy, I think because it’s easier to notice when an animal looks wrong, especially around the eyes. The auto-detected edges are not very good and in many cases didn’t detect the cat’s eyes, making it a bit worse for training the image translation model.
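To make the "edges automatically generated from those photos" idea concrete, here is a minimal sketch of how an (edges, photo) training pair could be built. It swaps in a very simple gradient-threshold edge detector, which is an assumption for illustration and not the detector used for edges2cats; the names `detect_edges`, `make_training_pair`, and the `threshold` value are hypothetical.

```python
def detect_edges(image, threshold=50):
    """Return a binary edge map (1 = edge) for a grayscale image given as a list of rows.

    Assumption: a plain gradient threshold, not the edge detector used by the demo.
    """
    height, width = len(image), len(image[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Forward differences; pixels on the last row/column compare with themselves.
            right = image[y][x + 1] if x + 1 < width else image[y][x]
            below = image[y + 1][x] if y + 1 < height else image[y][x]
            gradient = abs(right - image[y][x]) + abs(below - image[y][x])
            if gradient >= threshold:
                edges[y][x] = 1
    return edges


def make_training_pair(photo, threshold=50):
    """Pair an edge map (model input) with the photo it came from (model target)."""
    return detect_edges(photo, threshold), photo


# A 4x4 toy "photo": a bright square in the lower-right corner.
photo = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 200, 200],
    [0, 0, 200, 200],
]
edges, target = make_training_pair(photo)
```

A detector this crude misses low-contrast details, which is the same kind of failure the quote describes: if the eyes never show up in the edge map, the model never learns to draw them from a doodle.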

You can also try doodles with models trained on datasets of shoes, handbags, and building facades.

You can play around with the neural doodle experiments here

Two Minute Papers - Deep Learning Program Learns to Paint

A short video from Károly Zsolnai-Fehér gives a simple explanation of how neural network artistic style transfer works, including newer research that improves on it:

Artificial neural networks were inspired by the human brain and simulate how neurons behave when they are shown a sensory input (e.g., images, sounds, etc.). They are known to be excellent tools for image recognition, and many other problems beyond that - they also excel at weather predictions, breast cancer cell mitosis detection, brain image segmentation and toxicity prediction among many others. Deep learning means that we use an artificial neural network with multiple layers, making it even more powerful for more difficult tasks. This time they have been shown to be apt at reproducing the artistic style of many famous painters, such as Vincent Van Gogh and Pablo Picasso among many others. All the user needs to do is provide an input photograph and a target image from which the artistic style will be learned.
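As a rough illustration of what "a neural network with multiple layers" means, here is a tiny forward pass written in plain Python. It is only a sketch under stated assumptions: the weights are hypothetical, hand-picked numbers (a real network learns them from data), and it does not perform style transfer; it just shows values flowing through stacked layers.

```python
import math


def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum of the inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Simple nonlinearity: negative values become zero."""
    return [max(0.0, v) for v in values]


def sigmoid(value):
    """Squash a number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-value))


def forward(inputs, layers):
    """Pass inputs through every hidden layer (with ReLU), then a single sigmoid output."""
    activations = inputs
    for weights, biases in layers[:-1]:
        activations = relu(dense(activations, weights, biases))
    weights, biases = layers[-1]
    return sigmoid(dense(activations, weights, biases)[0])


# Hypothetical hand-picked weights, for illustration only.
layers = [
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),  # hidden layer 1: 2 inputs -> 2 units
    ([[1.0, 1.0]], [0.0]),                     # hidden layer 2: 2 units -> 1 unit
    ([[2.0]], [-1.0]),                         # output layer: 1 unit -> 1 score
]
score = forward([0.8, 0.2], layers)
```

Each layer only does simple arithmetic; the "deep" part is that the output of one layer becomes the input of the next, so later layers can respond to more complex patterns than earlier ones.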

Capture the Sun, Andrew McIntosh

“Entropic Corporeality” from my #Metamorph show at @krabjabstudio Last day to see it in #seattle tomorrow!

A simple reblog blog, aimed to support artwork, ideas and other mind candy.

42 posts