Neuroscience - Blog Posts

Starting uni again + honorable mention, my support animals

Dance for Two by Alan Lightman

Being You by Anil Seth

How Story, God, and Your Lying Brain Turn Chaos into Order by Nancy Mimeles Carey

Your Brain is a Time Machine by Dean Buonomano

Some of the books I've read recently + the covers

‘Wrinkles’ in time experience linked to heartbeat

How long is the present? The answer, Cornell researchers suggest in a new study, depends on your heart.

They found that our momentary perception of time is not continuous but may stretch or shrink with each heartbeat.

The research builds evidence that the heart is one of the brain’s important timekeepers and plays a fundamental role in our sense of time passing – an idea contemplated since ancient times, said Adam K. Anderson, professor in the Department of Psychology and in the College of Human Ecology (CHE).

“Time is a dimension of the universe and a core basis for our experience of self,” Anderson said. “Our research shows that the moment-to-moment experience of time is synchronized with, and changes with, the length of a heartbeat.”

Saeedeh Sadeghi, M.S. ’19, a doctoral student in the field of psychology, is the lead author of “Wrinkles in Subsecond Time Perception are Synchronized to the Heart,” published in the journal Psychophysiology. Anderson is a co-author with Eve De Rosa, the Mibs Martin Follett Professor in Human Ecology (CHE) and dean of faculty at Cornell, and Marc Wittmann, senior researcher at the Institute for Frontier Areas of Psychology and Mental Health in Germany.

Time perception typically has been tested over longer intervals, where research has shown that thoughts and emotions may distort our sense of time, perhaps making it fly or crawl. Sadeghi and Anderson recently reported, for example, that crowding made a simulated train ride seem to pass more slowly.

Such findings, Anderson said, tend to reflect how we think about or estimate time, rather than our direct experience of it in the present moment.

To investigate that more direct experience, the researchers asked if our perception of time is related to physiological rhythms, focusing on natural variability in heart rates. The cardiac pacemaker “ticks” steadily on average, but each interval between beats is a tiny bit longer or shorter than the preceding one, like a second hand clicking at different intervals.

The team harnessed that variability in a novel experiment. Forty-five study participants – ages 18 to 21, with no history of heart trouble – were monitored with electrocardiography, or ECG, measuring heart electrical activity at millisecond resolution. The ECG was linked to a computer, which enabled brief tones lasting 80-180 milliseconds to be triggered by heartbeats. Study participants reported whether tones were longer or shorter relative to others.

The results revealed what the researchers called “temporal wrinkles.” When the heartbeat preceding a tone was shorter, the tone was perceived as longer. When the preceding heartbeat was longer, the sound’s duration seemed shorter.

“These observations systematically demonstrate that the cardiac dynamics, even within a few heartbeats, is related to the temporal decision-making process,” the authors wrote.

The study also showed the brain influencing the heart. After hearing tones, study participants focused attention on the sounds. That “orienting response” changed their heart rate, affecting their experience of time.

“The heartbeat is a rhythm that our brain is using to give us our sense of time passing,” Anderson said. “And that is not linear – it is constantly contracting and expanding.”

The scholars said the connection between time perception and the heart suggests our momentary perception of time is rooted in bioenergetics, helping the brain manage effort and resources based on changing body states including heart rate.

The research shows, Anderson said, that in subsecond intervals too brief for conscious thoughts or feelings, the heart regulates our experience of the present.

“Even at these moment-to-moment intervals, our sense of time is fluctuating,” he said. “A pure influence of the heart, from beat to beat, helps create a sense of time.”

Retracing

Books and bookmarks

“The brain is radically resilient; it can create new neurons and make new connections through cortical remapping, a process called neurogenesis. Our minds have the incredible capacity to both alter the strength of connections among neurons, essentially rewiring them, and create entirely new pathways. (It makes a computer, which cannot create new hardware when its system crashes, seem fixed and helpless.) This amazing malleability is called neuroplasticity. Like daffodils in the early days of spring, my neurons were resprouting receptors as the winter of the illness ebbed.”

— Susannah Cahalan, Brain On Fire

The human brain, it turns out, can be surprisingly resistant to the ravages of time. A new study has cataloged human brains that have been found on the archaeological record around the world and discovered that this remarkable organ resists decomposition far more than we thought – even when the rest of the body's soft tissues have completely melted away.


Nighttime brain snacks

A User’s Guide To The Brain

More about the human brain and behaviour on @tobeagenius

Neat!

Horror Movies Manipulate Brain Activity Expertly to Enhance Excitement

Finnish research team maps neural activity in response to watching horror movies. A study conducted by the University of Turku identified the top horror movies of the past 100 years and shows how they manipulate brain activity. The findings were published in the journal NeuroImage.

Humans are fascinated by what scares us, be it sky-diving, roller-coasters, or true-crime documentaries – provided these threats are kept at a safe distance. Horror movies are no different.

Whilst all movies have our heroes face some kind of threat to their safety or happiness, horror movies up the ante by having some kind of superhuman or supernatural threat that cannot be reasoned with or fought easily.

The research team at the University of Turku, Finland, set out to study why we are drawn to such things as entertainment. The researchers first established the 100 best and scariest horror movies of the past century (Table 1) and how they made people feel.

Unseen Threats Are Most Scary

Firstly, 72% of people report watching at least one horror movie every 6 months, and their main reason for doing so, besides the feelings of fear and anxiety, was excitement. Watching horror movies was also an excuse to socialise, with many people preferring to watch horror movies with others rather than on their own.

People found horror that was psychological in nature and based on real events the scariest, and were far more scared by things that were unseen or implied rather than what they could actually see.

Table 1. Top ten scariest movies of the past century.

– This latter distinction reflects two types of fear that people experience: the creeping, foreboding dread that occurs when one feels that something isn’t quite right, and the instinctive response we have to the sudden appearance of a monster that makes us jump out of our skin, says principal investigator Professor Lauri Nummenmaa from Turku PET Centre.

MRI Reveals How Brain Reacts to Different Forms of Fear

Researchers wanted to know how the brain copes with fear in this complicated and ever-changing environment. The group had people watch a horror movie whilst their neural activity was measured in a magnetic resonance imaging scanner.

During those times when anxiety is slowly increasing, regions of the brain involved in visual and auditory perception become more active, as the need to attend to cues of threat in the environment becomes more important. After a sudden shock, brain activity is more evident in regions involved in emotion processing, threat evaluation, and decision making, enabling a rapid response.

(Image caption: Brain regions active during periods of impending dread (top row) and in response to sudden jump-scares (bottom))

However, these regions are in continuous cross-talk with sensory regions throughout the movie, as if the sensory regions were preparing response networks as a scary event became increasingly likely.

– Therefore, our brains are continuously anticipating and preparing us for action in response to threat, and horror movies exploit this expertly to enhance our excitement, explains Researcher Matthew Hudson.

Since I get asked a lot about where to learn more about the human brain and behaviour, I’ve made a masterpost of books, websites, videos and online courses to introduce yourself to that piece of matter that sits between your ears.

Books

The Brain Book by Rita Carter

The Psychology Book (a good starter book) by DK

Thinking, Fast and Slow by Daniel Kahneman

Quiet: The Power of Introverts in a World That Can’t Stop Talking by Susan Cain

The Man Who Mistook His Wife for a Hat by Oliver Sacks

The Brain: The Story of You by David Eagleman

The Brain That Changes Itself: Stories of Personal Triumph from the Frontiers of Brain Science by Norman Doidge

This Is Your Brain on Music by Daniel Levitin

The Autistic Brain by Richard Panek and Temple Grandin (highly recommended)

Sapiens: A Brief History of Humankind by Yuval Noah Harari (not really brain-related, but it is single-handedly the best book I have ever read)

Websites

@tobeagenius (shameless self-promotion)

How Stuff Works

Psych2Go

BrainFacts

Neuroscience for Kids (aimed at kids, but it has some good info)

New Scientist

National Geographic

Live Science

Videos & Youtube Channels

Mind Matters series by TED-Ed

Crash Course Psychology

SciShow Brain

Psych2Go TV

asapSCIENCE

BrainCraft

It’s Okay to Be Smart

Online Courses

The Addicted Brain

Visual Perception and The Brain

Understanding the Brain: The Neurobiology of Everyday Life

Psychology of Popularity

Harvard Fundamentals Of Neuroscience

as a neuroscience student i get so offended when people act like ai has any intelligence whatsoever

thinking we can make something as fast and complex as even a fruit fly’s brain is the height of human hubris

apologize to nature RIGHT NOW

your conscious movements have already been planned by your brain milliseconds before you decided to make them :)

they had people make random arm movements and report when exactly they decided to make them, all while recording their brain activity

i think this says some really neat stuff about how we view consciousness. esp in conjunction with the fact that damaging certain areas of your brain can fundamentally change your personality

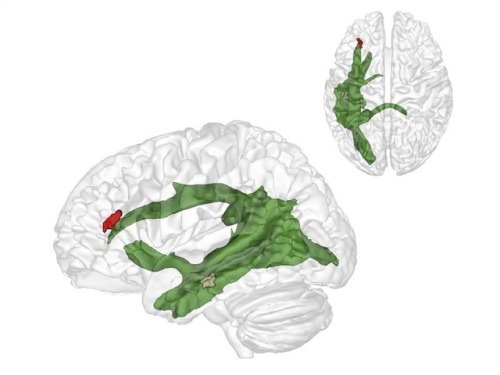

(Image caption: The maturation of fibres of a brain structure called the arcuate fascicle (green) between the ages of three and four years establishes a connection between two critical brain regions: a region (brown) at the back of the temporal lobe that supports adult thinking about others and their thoughts, and a region (red) in the frontal lobe that is involved in keeping things at different levels of abstraction and, therefore, helps us to understand what the real world is and what the thoughts of others are. Credit: © MPI CBS)

The importance of relating to others: why we only learn to understand other people after the age of four

When we are around four years old we suddenly start to understand that other people think and that their view of the world is often different from our own. Researchers in Leiden and Leipzig have explored how that works. Their study was published in Nature Communications on 21 March.

At around the age of four we suddenly do what three-year-olds are unable to do: put ourselves in someone else’s shoes. Researchers at the Max Planck Institute for Human Cognitive and Brain Sciences (MPI CBS) in Leipzig and at Leiden University have shown how this enormous developmental step occurs: a critical fibre connection in the brain matures. Senior researcher and Leiden developmental psychologist Nikolaus Steinbeis, co-author of the article, took part in the research. Lead author, PhD candidate Charlotte Grosse-Wiesmann, worked under his supervision.

Little Maxi

If you tell a 3-year-old child the following story of little Maxi, they will most probably not understand: Maxi puts his chocolate on the kitchen table, then goes to play outside. While he is gone, his mother puts the chocolate in the cupboard. Where will Maxi look for his chocolate when he comes back? A 3-year-old child will not understand why Maxi would be surprised not to find the chocolate on the table where he left it. It is only by the age of 4 years that a child will correctly predict that Maxi will look for his chocolate where he left it and not in the cupboard where it is now.

Theory of Mind

The researchers observed something similar when they showed a 3-year-old child a chocolate box that contained pencils instead of chocolates. When the child was asked what another child would expect to be in the box, they answered “pencils”, although the other child would not know this. Only a year later, around the age of four years, however, will they understand that the other child had hoped for chocolates. Thus, there is a crucial developmental breakthrough between three and four years: this is when we start to attribute thoughts and beliefs to others and to understand that their beliefs can be different from ours. Before that age, thoughts don’t seem to exist independently of what we see and know about the world. That is, this is when we develop a Theory of Mind.

Independent development

The researchers have now discovered what is behind this breakthrough. The maturation of fibres of a brain structure called the arcuate fascicle between the ages of three and four years establishes a connection between two critical brain regions: a region at the back of the temporal lobe that supports adult thinking about others and their thoughts, and a region in the frontal lobe that is involved in keeping things at different levels of abstraction and, therefore, helps us to understand what the real world is and what the thoughts of others are. Only when these two brain regions are connected through the arcuate fascicle can children start to understand what other people think. This is what allows us to predict where Maxi will look for his chocolate. Interestingly, this new connection in the brain supports this ability independently of other cognitive abilities, such as intelligence, language ability or impulse control.

Man with quadriplegia employs injury bridging technologies to move again—just by thinking

First recipient of implanted brain-recording and muscle-stimulating systems reanimates limb that had been stilled for eight years

Bill Kochevar grabbed a mug of water, drew it to his lips and drank through the straw.

His motions were slow and deliberate, but then Kochevar hadn’t moved his right arm or hand for eight years.

And it took some practice to reach and grasp just by thinking about it.

Kochevar, who was paralyzed below his shoulders in a bicycling accident, is believed to be the first person with quadriplegia in the world to have arm and hand movements restored with the help of two temporarily implanted technologies.

A brain-computer interface with recording electrodes under his skull, and a functional electrical stimulation (FES) system activating his arm and hand, reconnect his brain to paralyzed muscles.

Holding a makeshift handle pierced through a dry sponge, Kochevar scratched the side of his nose with the sponge. He scooped forkfuls of mashed potatoes from a bowl—perhaps his top goal—and savored each mouthful.

“For somebody who’s been injured eight years and couldn’t move, being able to move just that little bit is awesome to me,” said Kochevar, 56, of Cleveland. “It’s better than I thought it would be.”

Kochevar is the focal point of research led by Case Western Reserve University, the Cleveland Functional Electrical Stimulation (FES) Center at the Louis Stokes Cleveland VA Medical Center and University Hospitals Cleveland Medical Center (UH). A study of the work was published in The Lancet on March 28 at 6:30 p.m. U.S. Eastern time.

“He’s really breaking ground for the spinal cord injury community,” said Bob Kirsch, chair of Case Western Reserve’s Department of Biomedical Engineering, executive director of the FES Center and principal investigator (PI) and senior author of the research. “This is a major step toward restoring some independence.”

When asked, people with quadriplegia say their first priority is to scratch an itch, feed themselves or perform other simple functions with their arm and hand, instead of relying on caregivers.

“By taking the brain signals generated when Bill attempts to move, and using them to control the stimulation of his arm and hand, he was able to perform personal functions that were important to him,” said Bolu Ajiboye, assistant professor of biomedical engineering and lead study author.

Technology and training

The research with Kochevar is part of the ongoing BrainGate2 pilot clinical trial being conducted by a consortium of academic and VA institutions assessing the safety and feasibility of the implanted brain-computer interface (BCI) system in people with paralysis. Other investigational BrainGate research has shown that people with paralysis can control a cursor on a computer screen or a robotic arm (braingate.org).

“Every day, most of us take for granted that when we will to move, we can move any part of our body with precision and control in multiple directions and those with traumatic spinal cord injury or any other form of paralysis cannot,” said Benjamin Walter, associate professor of neurology at Case Western Reserve School of Medicine, clinical PI of the Cleveland BrainGate2 trial and medical director of the Deep Brain Stimulation Program at UH Cleveland Medical Center.

“The ultimate hope of any of these individuals is to restore this function,” Walter said. “By restoring the communication of the will to move from the brain directly to the body this work will hopefully begin to restore the hope of millions of paralyzed individuals that someday they will be able to move freely again.”

Jonathan Miller, assistant professor of neurosurgery at Case Western Reserve School of Medicine and director of the Functional and Restorative Neurosurgery Center at UH, led a team of surgeons who implanted two 96-channel electrode arrays—each about the size of a baby aspirin—in Kochevar’s motor cortex, on the surface of the brain.

The arrays record brain signals created when Kochevar imagines movement of his own arm and hand. The brain-computer interface extracts information from the brain signals about what movements he intends to make, then passes the information to command the electrical stimulation system.

To prepare him to use his arm again, Kochevar first learned how to use his brain signals to move a virtual-reality arm on a computer screen.

“He was able to do it within a few minutes,” Kirsch said. “The code was still in his brain.”

As Kochevar’s ability to move the virtual arm improved through four months of training, the researchers believed he would be capable of controlling his own arm and hand.

Miller then led a team that implanted the FES system’s 36 electrodes that animate muscles in the upper and lower arm.

The BCI decodes the recorded brain signals into the intended movement command, which is then converted by the FES system into patterns of electrical pulses.

The pulses sent through the FES electrodes trigger the muscles controlling Kochevar’s hand, wrist, arm, elbow and shoulder. To overcome gravity that would otherwise prevent him from raising his arm and reaching, Kochevar uses a mobile arm support, which is also under his brain’s control.

New Capabilities

Eight years of muscle atrophy required rehabilitation. The researchers exercised Kochevar’s arm and hand with cyclical electrical stimulation patterns. Over 45 weeks, his strength, range of motion and endurance improved. As he practiced movements, the researchers adjusted stimulation patterns to further his abilities.

Kochevar can make each joint in his right arm move individually. Or, just by thinking about a task such as feeding himself or getting a drink, the muscles are activated in a coordinated fashion.

When asked to describe how he commanded the arm movements, Kochevar told investigators, “I’m making it move without having to really concentrate hard at it…I just think ‘out’…and it goes.”

Kochevar is fitted with temporarily implanted FES technology that has a track record of reliable use in people. The combined BCI and FES system represents an early feasibility demonstration that gives the research team insights into the potential future benefit of the combined system.

Advances needed to make the combined technology usable outside of a lab are not far from reality, the researchers say. Work is underway to make the brain implant wireless, and the investigators are improving decoding and stimulation patterns needed to make movements more precise. Fully implantable FES systems have already been developed and are also being tested in separate clinical research.

Kochevar welcomes new technology—even if it requires more surgery—that will enable him to move better. “This won’t replace caregivers,” he said. “But, in the long term, people will be able, in a limited way, to do more for themselves.”

The investigational BrainGate technology was initially developed in the Brown University laboratory of John Donoghue, now the founding director of the Wyss Center for Bio and Neuroengineering in Geneva, Switzerland. The implanted recording electrodes are known as the Utah array, originally designed by Richard Normann, Emeritus Distinguished Professor of Bioengineering at the University of Utah.

The report in The Lancet is the result of a long-running collaboration between Kirsch, Ajiboye and the multi-institutional BrainGate consortium. Leigh Hochberg, a neurologist and neuroengineer at Massachusetts General Hospital, Brown University and the VA RR&D Center for Neurorestoration and Neurotechnology in Providence, Rhode Island, directs the pilot clinical trial of the BrainGate system and is a study co-author.

“It’s been so inspiring to watch Mr. Kochevar move his own arm and hand just by thinking about it,” Hochberg said. “As an extraordinary participant in this research, he’s teaching us how to design a new generation of neurotechnologies that we all hope will one day restore mobility and independence for people with paralysis.”

The late effects of stress: New insights into how the brain responds to trauma

Mrs. M would never forget that day. She was walking along a busy road next to the vegetable market when two goons zipped past on a bike. One man’s hand shot out and grabbed the chain around her neck. The next instant, she had stumbled to her knees and was dragged along in the wake of the bike. Thankfully, the chain snapped, and she got away with a mildly bruised neck. Though dazed, Mrs. M was fine until a week after the incident.

Then, the nightmares began.

She would struggle and yell and fight in her sleep every night with phantom chain snatchers. Every bout left her charged with anger and often left her depressed. The episodes continued for several months until they finally stopped. How could a single stressful event have such extended consequences?

A new study by Indian scientists has gained insights into how a single instance of severe stress can lead to delayed and long-term psychological trauma. The work pinpoints key molecular and physiological processes that could be driving changes in brain architecture.

The team, led by Sumantra Chattarji from the National Centre for Biological Sciences (NCBS) and the Institute for Stem Cell Biology and Regenerative Medicine (inStem), Bangalore, has shown that a single stressful incident can lead to increased electrical activity in a brain region known as the amygdala. This activity sets in late, ten days after a single stressful episode, and depends on a molecule known as the N-methyl-D-aspartate receptor (NMDA-R), an ion channel protein on nerve cells known to be crucial for memory functions.

The amygdala is a small, almond-shaped group of nerve cells located deep within the temporal lobe of the brain. This region of the brain is known to play key roles in emotional reactions, memory and decision-making. Changes in the amygdala are linked to the development of Post-Traumatic Stress Disorder (PTSD), a mental condition that develops in a delayed fashion after a harrowing experience.

Previously, Chattarji’s group had shown that a single instance of acute stress had no immediate effects on the amygdala of rats. But ten days later, these animals began to show increased anxiety, and delayed changes in the architecture of their brains, especially the amygdala.

“We showed that our study system is applicable to PTSD. This delayed effect after a single episode of stress was reminiscent of what happens in PTSD patients,” says Chattarji. “We know that the amygdala is hyperactive in PTSD patients. But no one knows as of now, what is going on in there,” he adds.

Investigations revealed major changes in the microscopic structure of the nerve cells in the amygdala. Stress seems to have caused the formation of new nerve connections called synapses in this region of the brain. However, until now, the physiological effects of these new connections were unknown.

In their recent study, Chattarji’s team has established that the new nerve connections in the amygdala lead to heightened electrical activity in this region of the brain.

“Most studies on stress are done on a chronic stress paradigm with repeated stress, or with a single stress episode where changes are looked at immediately afterwards – like a day after the stress,” says Farhana Yasmin, one of Chattarji’s students. “So, our work is unique in that we show a reaction to a single instance of stress, but at a delayed time point,” she adds.

Furthermore, NMDA-R, a well-known protein involved in memory and learning, has been identified as one of the agents that bring about these changes. Blocking NMDA-R during the stressful period not only stopped the formation of new synapses but also blocked the increase in electrical activity at these synapses.

“So we have for the first time, a molecular mechanism that shows what is required for the culmination of events ten days after a single stress,” says Chattarji. “In this study, we have blocked the NMDA Receptor during stress. But we would like to know if blocking the molecule after stress can also block the delayed effects of the stress. And if so, how long after the stress can we block the receptor to define a window for therapy,” he adds.

Chattarji’s group first began their investigations into how stress affects the amygdala and other regions of the brain around ten years ago. The work has required the team to employ an array of highly specialised and diverse procedures that range from observing behaviour to recording electrical signals from single brain cells and using an assortment of microscopy techniques. “To do this, we have needed to use a variety of techniques, for which we required collaborations with people who have expertise in such techniques,” says Chattarji. “And the glue for such collaborations especially in terms of training is vital. We are very grateful to the Wadhwani Foundation that supports our collaborative efforts and to the DBT and DAE for funding this work,” he adds.

Paralyzed ALS patient operates speech computer with her mind

At UMC Utrecht, a brain implant has been placed in a patient, enabling her to operate a speech computer with her mind. The researchers and the patient worked intensively to get the settings right. She can now communicate at home with her family and caregivers via the implant. That a patient can use this technique at home is unique in the world. This research was published in the New England Journal of Medicine.

Because she has ALS, the patient is no longer able to move or speak. Doctors placed electrodes in her brain, and the electrodes pick up brain activity. This enables her to wirelessly control a speech computer that she now uses at home.

Mouse click

The patient operates the speech computer by moving her fingers in her mind. This changes the brain signal under the electrodes, and that change is converted into a mouse click. On a screen in front of her she can see the alphabet, plus some additional functions such as deleting a letter or word and selecting words based on the letters she has already spelled. The letters on the screen light up one by one, and she selects a letter by using her brain signal to trigger the click at the right moment. That way she can compose words, letter by letter, which are then spoken by the speech computer. This technique is comparable to operating a speech computer with a push-button (using a muscle that still functions, for example, in the neck or hand). So now, if a patient lacks muscle activity, a brain signal can be used instead.
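The letter-by-letter procedure described above works like a classic single-switch "scanning" speller. A single binary signal is timed against a moving highlight. Below is a toy Python sketch of that idea, under stated assumptions:

- The item layout (26 letters plus hypothetical SPACE and DEL entries) is assumed.
- The scan is assumed to restart at the first item after every selection.
- The names ITEMS, select, spell and steps_needed are hypothetical.

The real UMC Utrecht interface, its timing and its word prediction are not modelled.

```python
import string

# Hypothetical screen layout: 26 letters plus two extra functions.
ITEMS = list(string.ascii_uppercase) + ["SPACE", "DEL"]

def select(step):
    """Item highlighted at a given scan step (0-based); the scan wraps around."""
    return ITEMS[step % len(ITEMS)]

def spell(clicks):
    """Turn a sequence of brain 'clicks' into text.

    Each click is the scan step, counted from a restart at the first item,
    at which the click fired. Assumes the scan restarts after every selection.
    """
    text = []
    for step in clicks:
        item = select(step)
        if item == "DEL":
            if text:
                text.pop()  # delete the last character, if any
        elif item == "SPACE":
            text.append(" ")
        else:
            text.append(item)
    return "".join(text)

def steps_needed(text):
    """Highlights a user sits through to spell `text` (scan restarts each time).

    Only letters and spaces are supported; other characters raise ValueError.
    """
    total = 0
    for ch in text.upper():
        item = "SPACE" if ch == " " else ch
        total += ITEMS.index(item) + 1  # count highlights up to and including the target
    return total

if __name__ == "__main__":
    print(spell([7, 8]))         # HI
    print(spell([7, 8, 27, 4]))  # HE  (H, I, delete I, E)
    print(steps_needed("HI"))    # 17
```

Even this toy count hints at why spelling one letter at a time is slow. It also shows why selecting whole words from the letters already spelled can save the user many scan steps.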

Wireless

The patient underwent surgery during which electrodes were placed on her brain through tiny holes in her skull. A small transmitter was then placed in her body below her collarbone. This transmitter receives the signals from the electrodes via subcutaneous wires, amplifies them and transmits them wirelessly. The mouse click is calculated from these signals, actuating the speech computer. The patient is closely supervised. Shortly after the operation, she started on a journey of discovery together with the researchers to find the right settings for the device and the perfect way to get her brain activity under control. It started with a “simple” game to practice the art of clicking. Once she mastered clicking, she focused on the speech computer. She can now use the speech computer without the help of the research team.

The UMC Utrecht Brain Center has spent many years researching the possibility of controlling a computer by means of electrodes that capture brain activity. Speech computers driven by brain signals recorded with a bathing cap fitted with electrodes have long been tested in various research laboratories. That a patient can use the technique at home, through invisible, implanted electrodes, is unique in the world.

If the implant proves to work well in three people, the researchers hope to launch a larger, international trial. Ramsey: “We hope that these results will stimulate research into more advanced implants, so that some day not only people with communication problems, but also people with paraplegia, for example, can be helped.”

Can the brain feel it? The world’s smallest extracellular needle-electrodes

A research team in the Department of Electrical and Electronic Information Engineering and the Electronics-Inspired Interdisciplinary Research Institute (EIIRIS) at Toyohashi University of Technology developed 5-μm-diameter needle-electrodes on 1 mm × 1 mm block modules. This tiny needle may help solve the mysteries of the brain and facilitate the development of a brain-machine interface. The research results were reported in Scientific Reports on Oct 25, 2016.

(Image caption: Extracellular needle-electrode with a diameter of 5 μm mounted on a connector)

The neuron networks in the human brain are extremely complex. Microfabricated silicon needle-electrode devices were expected to be an innovation that would be able to record and analyze the electrical activities of the microscale neuronal circuits in the brain.

However, smaller needles (e.g., needle diameter < 10 μm) are necessary to reduce damage to brain tissue. In addition to the needle geometry, the device substrate should be minimized, not only to reduce the total damage to tissue but also to make the electrode easier to position in the brain. Such electrode technologies could enable new experimental approaches in neurophysiology.


The individual microneedles are fabricated on the block modules, which are small enough to be used in the narrow spaces within brain tissue, as demonstrated by recordings from mouse cerebral cortex. In addition, the block module greatly increases flexibility in packaging design, opening the way to numerous in vivo recording applications.

“We demonstrated the high design variability in the packaging of our electrode device, and in vivo neuronal recordings were performed by simply placing the device on a mouse’s brain. We were very surprised that high quality signals of a single unit were stably recorded over a long period using the 5-μm-diameter needle,” explained the first author, Assistant Professor Hirohito Sawahata, and co-author, researcher Shota Yamagiwa.

The leader of the research team, Associate Professor Takeshi Kawano said: “Our silicon needle technology offers low invasive neuronal recordings and provides novel methodologies for electrophysiology; therefore, it has the potential to enhance experimental neuroscience.” He added, “We expect the development of applications to solve the mysteries of the brain and the development of brain–machine interfaces.”

“Brainprint” Biometric ID Hits 100% Accuracy

Psychologists and engineers at Binghamton University in New York say they’ve hit a milestone in the quest to use the unassailable inner workings of the mind as a form of biometric identification. They came up with an electroencephalograph system that proved 100 percent accurate at identifying individuals by the way their brains responded to a series of images. But EEG as a practical means of authentication is still far off.

Many earlier attempts had come close to 100 percent accuracy but couldn’t completely close the gap. “It’s a big deal going from 97 to 100 percent because we imagine the applications for this technology being for high-security situations,” says Sarah Laszlo, an assistant professor of psychology at Binghamton who led the research with electrical engineering professor Zhanpeng Jin.

Perhaps as important as perfect accuracy is that this new form of ID can do something fingerprints and retinal scans have a hard time achieving: It can be “canceled.”

Fingerprint authentication can be reset if the associated data is stolen, because that data can be stored as a mathematically transformed version of itself, points out Clarkson University biometrics expert Stephanie Schuckers. However, that trick doesn’t work if it’s the fingerprint (or the finger) itself that’s stolen. And the theft part, at least, is easier than ever. In 2014 hackers claimed to have cloned German defense minister Ursula von der Leyen’s fingerprints just by taking a high-definition photo of her hands at a public event.

Several early attempts at EEG-based identification sought the equivalent of a fingerprint in the electrical activity of a brain at rest. But this new brain biometric, which its inventors call CEREBRE, dodges the cancelability problem because it’s based on the brain’s responses to a sequence of particular types of images. To keep that ID from being permanently hijacked, those images can be changed or re-sorted to essentially make a new biometric passkey, should the original one somehow be hacked.
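The revocability idea can be sketched in a few lines. This is a minimal illustration, not CEREBRE's actual protocol: it assumes the passkey is simply a secret seed that selects and orders images from a larger pool, and that `record_response` is a hypothetical stand-in for an EEG recording of one image. If a template is compromised, a new seed yields a different image sequence and the user re-enrolls against it. The default of 27 images borrows the number the researchers report as sufficient; all function names here are hypothetical.

```python
import random


def make_challenge(image_pool, secret_seed, length=27):
    """Select and order `length` images from the pool; the seed acts as a revocable passkey."""
    rng = random.Random(secret_seed)
    return rng.sample(image_pool, length)


def enroll(record_response, challenge):
    """Build a template from the user's responses to one specific image sequence.

    `record_response` is a hypothetical callback standing in for an EEG
    recording of the response to a single image.
    """
    return [record_response(image) for image in challenge]


def revoke_and_reissue(image_pool, record_response, new_seed, length=27):
    """Discard the old passkey: draw a fresh image sequence and enroll against it."""
    challenge = make_challenge(image_pool, new_seed, length)
    return challenge, enroll(record_response, challenge)
```

Unlike a fingerprint, nothing here is tied to a fixed physical trait: the stored template depends on which images were shown and in what order, so changing the seed changes what must be matched.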

CEREBRE, which Laszlo, Jin, and colleagues described in IEEE Transactions on Information Forensics and Security, involves presenting a person wearing an EEG system with images that fall into several categories: foods people feel strongly about, celebrities who also evoke emotions, simple sine waves of different frequencies, and uncommon words. The words and images are usually black and white, but occasionally one is presented in color because that produces its own kind of response.

Each image causes a recognizable change in voltage at the scalp called an event-related potential, or ERP. The different categories of images involve somewhat different combinations of parts of your brain, and they were already known to produce slight differences in the shapes of ERPs in different people. Laszlo’s hypothesis was that using all of them—several more than any other system—would create enough different ERPs to accurately distinguish one person from another.

The EEG responses were fed to software called a classifier. After testing several schemes, including a variety of neural networks and other machine-learning tricks, the engineers found that what actually worked best was a system based on simple cross correlation.
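A cross-correlation classifier of this general kind can be sketched as template matching. This is a minimal sketch under stated assumptions, not the published CEREBRE pipeline: each enrolled person is assumed to have a stored template (for example, averaged ERPs concatenated across image categories), templates and probe have the same length, and a new recording is assigned to the person whose template gives the highest normalized cross-correlation over small time shifts. The function names, the `max_lag` value, and the synthetic data are hypothetical.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is flat)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    dx = [v - mx for v in x]
    dy = [v - my for v in y]
    den = math.sqrt(sum(v * v for v in dx) * sum(v * v for v in dy))
    return sum(a * b for a, b in zip(dx, dy)) / den if den else 0.0


def max_normalized_xcorr(a, b, max_lag=5):
    """Peak normalized cross-correlation of equal-length a and b over shifts up to max_lag samples."""
    best = -1.0
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            x, y = a[lag:], b[:len(b) - lag]
        else:
            x, y = a[:len(a) + lag], b[-lag:]
        best = max(best, pearson(x, y))
    return best


def identify(probe, templates, max_lag=5):
    """Return the enrolled name whose template best cross-correlates with the probe."""
    return max(templates, key=lambda name: max_normalized_xcorr(probe, templates[name], max_lag))


if __name__ == "__main__":
    rng = random.Random(0)
    # Synthetic stand-ins for per-person ERP templates (hypothetical data).
    templates = {name: [rng.gauss(0, 1) for _ in range(200)] for name in ("ana", "ben", "cy")}
    # A new, noisy recording from "ben".
    probe = [v + rng.gauss(0, 0.3) for v in templates["ben"]]
    print(identify(probe, templates))
```

Allowing a few samples of shift tolerates small timing jitter between sessions; with `max_lag=0` this reduces to plain correlation against each template.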

In the experiments, each of the 50 test subjects saw a sequence of 500 images, each flashed for 1 second. “We collected 500, knowing it was overkill,” Laszlo says. Once the researchers crunched the data they found that just 27 images would have been enough to hit the 100 percent mark.

The experiments were done with a high-quality research-grade EEG, which used 30 electrodes attached to the scalp with conductive goop. However, the data showed that the system needs only three electrodes for 100 percent identification, and Laszlo says her group is working on simplifying the setup. They’re testing consumer EEG gear from Emotiv and NeuroSky, and they’ve even tried to replicate the work with electrodes embedded in a Google Glass, though the results weren’t spectacular, she says.

For EEG to really be taken seriously as a biometric ID, brain interfaces will need to be pretty commonplace, says Schuckers. That might yet happen. “As we go more and more into wearables as a standard part of our lives, [EEGs] might be more suitable,” she says.

But like any security system, even an EEG biometric will attract hackers. How can you hack something that depends on your thought patterns? One way, explains Laszlo, is to train a hacker’s brain to mimic the right responses. That would involve flashing light into a hacker’s eye at precise times while the person is observing the images. These flashes are known to alter the shape of the ERP.

(Image caption: If this picture makes you feel uncomfortable, you feel empathic pain. This sensation activates the same brain regions as real pain. © Kai Weinsziehr for MPG)

The anatomy of pain

Grimacing, we flinch when we see someone accidentally hit their thumb with a hammer. But is it really pain we feel? Researchers at the Max Planck Institute for Human Cognitive and Brain Sciences in Leipzig and other institutions have now proposed a new theory that describes pain as a multi-layered gradual event which consists of specific pain components, such as a burning sensation in the hand, and more general components, such as negative emotions. A comparison of the brain activation patterns during both experiences could clarify which components the empathic response shares with real pain.

Imagine you’re driving a nail into a wall with a hammer and accidentally bang your finger. You would probably injure finger tissue, feel physical distress, focus all your attention on your injured finger and take care not to repeat the misfortune. All this describes physical and psychological manifestations of “pain” – specifically, so-called nociceptive pain experienced by your body, which is caused by the stimulation of pain receptors.

Now imagine that you see a friend injure himself or herself in the same way. You would again literally wince and feel pain, empathetic pain in this case. Although you yourself have not sustained any injury, to some extent you would experience the same symptoms: You would feel anxiety; you may recoil to put distance between yourself and the source of the pain; and you would store information about the context of the experience in order to avoid pain in the future.

Activity in the brain

Previous studies have shown that the same brain structures – namely the anterior insula and the cingulate cortex – are activated, irrespective of whether the pain is personally experienced or empathetic. However, despite this congruence in the underlying activated areas of the brain, the extent to which the two forms of pain really are similar remains a matter of considerable controversy.

To help shed light on the matter, neuroscientists, including Tania Singer, Director at the Max Planck Institute for Human Cognitive and Brain Sciences in Leipzig, have now proposed a new theory: “We need to get away from this either-or question, whether the pain is genuine or not.”

Instead, pain should be seen as an interaction of multiple elements that together form the complex experience we call “pain”. The elements include sensory processes, which determine, for example, where the pain stimulus was triggered: in the hand or in the foot? In addition, emotional processes, such as the negative feeling experienced during pain, also come into play. “The decisive point is that the individual processes can also play a role in other experiences, albeit in a different activation pattern,” Singer explains, for example if someone tickles your hand or foot, or you see images of people suffering on television. Other processes, such as the stimulation of pain receptors, are probably highly specific to pain. The neuroscientists therefore propose comparing the elements of direct and empathetic pain: Which elements are shared and which, by contrast, are specific and unique to each form of pain?

Areas process general components

A study published almost simultaneously by scientists from the Max Planck Institute for Human Cognitive and Brain Sciences and the University of Geneva has provided strong support for this theory. For the first time, they were able to demonstrate that during painful experiences the anterior insula and the cingulate cortex process both general components, which also occur during other negative experiences such as disgust or indignation, and specific pain information, whether the pain is direct or empathic.

The general components signal that an experience is in fact unpleasant and not joyful. The specific information, in turn, tells us that pain – not disgust or indignation – is involved, and whether the pain is being experienced by you or someone else. “Both the nonspecific and the specific information are processed in parallel in the brain structures responsible for pain. But the activation patterns are different,” says Anita Tusche, also a neuroscientist at the Max Planck Institute in Leipzig and one of the authors of the study.

Because our brain deals with these components in parallel, we can process various unpleasant experiences in a time- and energy-saving manner. At the same time, however, we are able to register detailed information quickly, so that we know exactly what kind of unpleasant event has occurred and whether it affects us directly or vicariously. “The fact that our brain processes pain and other unpleasant events simultaneously for the most part, no matter if they are experienced by us or someone else, is very important for social interactions,” Tusche says, “because it helps us understand what others are experiencing.”

Sleep suppresses brain rebalancing

Why humans and other animals sleep is one of the remaining deep mysteries of physiology. One prominent theory in neuroscience is that sleep is when the brain replays memories “offline” to better encode them (“memory consolidation”). A prominent and competing theory is that sleep is important for re-balancing activity in brain networks that have been perturbed during learning while awake. Such “rebalancing” of brain activity involves homeostatic plasticity mechanisms that were first discovered at Brandeis University, and have been thoroughly studied by a number of Brandeis labs including the Turrigiano lab. Now, a study from the Turrigiano lab just published in the journal Cell shows that these homeostatic mechanisms are indeed gated by sleep and wake, but in the opposite direction from that theorized previously: homeostatic brain rebalancing occurs exclusively when animals are awake, and is suppressed by sleep. These findings raise the intriguing possibility that different forms of brain plasticity – for example those involved in memory consolidation and those involved in homeostatic rebalancing – must be temporally segregated from each other to prevent interference.

The requirement that neurons carefully maintain an average firing rate, much like the thermostat in a house senses and maintains temperature, has long been suggested by computational work. Without homeostatic (“thermostat-like”) control of firing rates, models of neural networks cannot learn and drift into states of epilepsy-like saturation or complete quiescence. Much of the work in discovering and describing candidate mechanisms continues to be conducted at Brandeis. In 2013, the Turrigiano Lab provided the first in vivo evidence for firing rate homeostasis in the mammalian brain: lab members recorded the activity of individual neurons in the visual cortex of freely behaving rat pups for 8h per day across a nine-day period during which vision through one eye was occluded. The activity of neurons initially dropped, but over the next 4 days, firing rates came back to basal levels despite the visual occlusion. In essence, these experiments confirmed what had long been suspected – the activity of neurons in intact brains is indeed homeostatically governed.

Given this unique opportunity to study a fundamental mechanism of brain plasticity in an unrestrained animal, the lab has been probing whether an animal’s behavior and homeostatic plasticity intersect. To truly evaluate possible circadian and behavioral influences on neuronal homeostasis, it was necessary to capture the entire 9-day experiment rather than evaluate snapshots of each day. For this work, the Turrigiano Lab had to find creative computational solutions for recording the many terabytes of data needed to follow the activity of single neurons without interruption for more than 200 hours. Ultimately, these data revealed that the homeostatic regulation of neuronal activity in the cortex is gated by sleep and wake states. In a surprising and unpredicted twist, the homeostatic recovery of activity occurred almost exclusively during periods of active waking and was inhibited during sleep. Prior predictions either assumed no role for behavioral state or assumed that sleep would account for homeostasis. Finally, the lab established evidence for a causal role for active waking by artificially extending natural waking periods during the homeostatic rebound: when animals were kept awake, homeostatic plasticity was further enhanced.
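The thermostat analogy and the sleep/wake gate can be illustrated with a toy simulation. This is a hypothetical model, not the Turrigiano lab's analysis: a single neuron's firing rate is its input drive times a gain, the drive is halved from day 1 onward (standing in for occluding one eye), and the gain is nudged multiplicatively toward a target rate only during the waking hours of each simulated day. The function name and every parameter value are arbitrary assumptions chosen for illustration.

```python
def simulate_firing_rate(days=9, hours_per_day=24, awake_hours=12,
                         target_rate=5.0, input_drop=0.5, occlusion_day=1,
                         eta=0.1, gate_by_wake=True):
    """Toy firing-rate 'thermostat' with an optional sleep/wake gate.

    Returns the firing rate (arbitrary units) at every simulated hour.
    The first `awake_hours` of each day count as waking.
    """
    gain = 1.0                    # the quantity the thermostat adjusts
    baseline_drive = target_rate  # chosen so rate == target_rate before occlusion
    rates = []
    for day in range(days):
        # From occlusion_day on, input to the neuron is reduced (e.g. one eye closed).
        drive = baseline_drive * (input_drop if day >= occlusion_day else 1.0)
        for hour in range(hours_per_day):
            rate = gain * drive
            rates.append(rate)
            awake = hour < awake_hours
            if awake or not gate_by_wake:
                # Multiplicative correction: raise gain when too quiet, lower it when too active.
                gain *= 1.0 + eta * (target_rate - rate) / target_rate
    return rates
```

With `awake_hours=0` the gate never opens and the rate stays at its depressed value; with waking hours allowed, it climbs back toward the target. The multiplicative update is one simple choice; other error-correcting rules would illustrate the same point.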

This finding opens doors onto a new field of understanding the behavioral, environmental, and circadian influences on homeostatic plasticity mechanisms in the brain. Some of the key questions that immediately beg to be answered include:

What is it about sleep that precludes the expression of homeostatic plasticity?

How is it possible that mechanisms requiring complex patterns of transcription, translation, trafficking, and modification can be modulated on the short timescales of behavioral state-transitions in rodents?

And finally, how generalizable is this finding? As homeostasis is bidirectional, does a shift in the opposite direction similarly require wake or does the change in sign allow for new rules in expression?

Researchers can identify you by your brain waves with 100 percent accuracy

Your responses to certain stimuli – foods, celebrities, words – might seem trivial, but they say a lot about you. In fact (with the proper clearance), these responses could gain you access into restricted areas of the Pentagon.

A team of researchers at Binghamton University, led by Assistant Professor of Psychology Sarah Laszlo and Assistant Professor of Electrical and Computer Engineering Zhanpeng Jin, recorded the brain activity of 50 people wearing an electroencephalogram headset while they looked at a series of 500 images designed specifically to elicit unique responses from person to person – e.g., a slice of pizza, a boat, Anne Hathaway, the word “conundrum.” They found that participants’ brains reacted differently to each image, enough that a computer system was able to identify each volunteer’s “brainprint” with 100 percent accuracy.

“When you take hundreds of these images, where every person is going to feel differently about each individual one, then you can be really accurate in identifying which person it was who looked at them just by their brain activity,” said Laszlo.

In their original study, titled “Brainprint,” published in 2015 in Neurocomputing, the research team was able to identify one person out of a group of 32 by that person’s responses with only 97 percent accuracy, and that study incorporated only words, not images.

Maria V. Ruiz-Blondet, Zhanpeng Jin, Sarah Laszlo. CEREBRE: A Novel Method for Very High Accuracy Event-Related Potential Biometric Identification. IEEE Transactions on Information Forensics and Security, 2016; 11 (7): 1618 DOI: 10.1109/TIFS.2016.2543524

Woman wearing an EEG headset. Credit: Jonathan Cohen/Binghamton University

Complex learning dismantles barriers in the brain

Biology lessons teach us that the brain is divided into separate areas, each of which processes a specific sense. But findings published in eLife show we can supercharge it to be more flexible.

Scientists at the Jagiellonian University in Poland taught Braille to sighted individuals and found that learning such a complex tactile task activates the visual cortex, when you’d only expect it to activate the tactile one.

“The textbooks tell us that the visual cortex processes visual tasks while the tactile cortex, called the somatosensory cortex, processes tasks related to touch,” says lead author Marcin Szwed from Jagiellonian University.

“Our findings tear up that view, showing we can establish new connections if we undertake a complex enough task and are given long enough to learn it.”

The findings could have implications for our power to bend different sections of the brain to our will by learning other demanding skills, such as playing a musical instrument or learning to drive. The flexibility occurs because the brain overcomes the normal division of labour and establishes new connections to boost its power.

It was already known that the brain can reorganize after a massive injury or as a result of massive sensory deprivation such as blindness. The visual cortex of the blind, deprived of its input, adapts for other tasks such as speech, memory, and reading Braille by touch. There has been speculation that this might also be possible in the normal, adult brain, but there has been no conclusive evidence.

“For the first time we’re able to show that large-scale reorganization is a viable mechanism that the sighted, adult brain is able to recruit when it is sufficiently challenged,” says Szwed.

Over nine months, 29 volunteers were taught to read Braille while blindfolded. They achieved reading speeds of between 0 and 17 words per minute. Before and after the course, they took part in a functional magnetic resonance imaging (fMRI) experiment to test the impact of their learning on regions of the brain. This revealed that following the course, areas of the visual cortex, particularly the Visual Word Form Area, were activated and that connections with the tactile cortex were established.

In an additional experiment using transcranial magnetic stimulation, scientists applied a magnetic field from a coil to selectively suppress the Visual Word Form Area in the brains of nine volunteers. This impaired their ability to read Braille, confirming the role of this site for the task. The results also discount the hypothesis that the visual cortex could have been activated merely because volunteers used their imaginations to picture Braille dots.

“We are all capable of retuning our brains if we’re prepared to put the work in,” says Szwed.

He asserts that the findings call for a reassessment of our view of the functional organization of the human brain, which is more flexible than the brains of other primates.

“The extra flexibility that we have uncovered might be one of those features that made us human, and allowed us to create a sophisticated culture, with pianos and the Braille alphabet,” he says.

Look! A scientist who says more scrutiny is needed! Yea!

AND - “Don’t try this at home!”

Cadaver study casts doubts on how zapping brain may boost mood, relieve pain

Earlier this month, György Buzsáki of New York University (NYU) in New York City showed a slide that sent a murmur through an audience in the Grand Ballroom of New York’s Midtown Hilton during the annual meeting of the Cognitive Neuroscience Society. It wasn’t just the grisly image of a human cadaver with more than 200 electrodes inserted into its brain that set people whispering; it was what those electrodes detected—or rather, what they failed to detect.

When Buzsáki and his colleague, Antal Berényi, of the University of Szeged in Hungary, mimicked an increasingly popular form of brain stimulation by applying alternating electrical current to the outside of the cadaver’s skull, the electrodes inside registered little. Hardly any current entered the brain. On closer study, the pair discovered that up to 90% of the current had been redirected by the skin covering the skull, which acted as a “shunt,” Buzsáki said.

The new, unpublished cadaver data make dramatic effects on neurons unlikely, Buzsáki says. Most transcranial direct current stimulation (tDCS) and transcranial alternating current stimulation (tACS) devices deliver about 1 to 2 milliamps of current. Yet based on measurements from electrodes inside multiple cadavers, Buzsáki calculated that at least 4 milliamps, roughly equivalent to the discharge of a stun gun, would be necessary to stimulate the firing of living neurons inside the skull. Buzsáki notes he got dizzy when he tried 5 milliamps on his own scalp. “It was alarming,” he says, warning people not to try such intense stimulation at home.

Buzsáki expects a living person’s skin would shunt even more current away from the brain because it is better hydrated than a cadaver’s scalp. He agrees, however, that low levels of stimulation may have subtle effects on the brain that fall short of triggering neurons to fire. Electrical stimulation might also affect glia, brain cells that provide neurons with nutrients, oxygen, and protection from pathogens, and also can influence the brain’s electrical activity. “Further questions should be asked” about whether 1- to 2-milliamp currents affect those cells, he says.

Buzsáki, who still hopes to use such techniques to enhance memory, is more restrained than some critics. The tDCS field is “a sea of bullshit and bad science—and I say that as someone who has contributed some of the papers that have put gas in the tDCS tank,” says neuroscientist Vincent Walsh of University College London. “It really needs to be put under scrutiny like this.”

Thorny life of new-born neurons

Even in adult brains, new neurons are generated throughout a lifetime. In a publication in the scientific journal PNAS, a research group led by Goethe University describes plastic changes of adult-born neurons in the hippocampus, a critical region for learning: frequent nerve signals enlarge the spines on neuronal dendrites, which in turn enables contact with the existing neural network.

Practise makes perfect, and constant repetition promotes the ability to remember. Researchers have been aware for some time that repeated electrical stimulation strengthens the connections between neurons (synapses) in the brain, much as a frequently used trail gradually widens into a path. Conversely, rarely used synapses can also be removed, for example when the vocabulary of a foreign language is forgotten after leaving school because it is no longer practised. Researchers call this ability to change connections permanently and as needed the plasticity of the brain.

Plasticity is especially important in the hippocampus, a primary region associated with long-term memory, in which new neurons are formed throughout life. The research groups led by Dr Stephan Schwarzacher (Goethe University), Professor Peter Jedlicka (Goethe University and Justus Liebig University in Gießen) and Dr Hermann Cuntz (FIAS, Frankfurt) therefore studied the long-term plasticity of synapses in new-born hippocampal granule cells. Synaptic interconnections between neurons are predominantly anchored on small thorny protrusions on the dendrites called spines. The dendrites of most neurons are covered with these spines, similar to the thorns on a rose stem.

In their recently published work, the scientists were able to demonstrate for the first time that synaptic plasticity in new-born neurons is connected to long-term structural changes in the dendritic spines: repeated electrical stimulation strengthens the synapses by enlarging their spines. A particularly surprising observation was that the overall size and number of spines did not change: when the stimulation strengthened a group of synapses, and their dendritic spines enlarged, a different group of synapses that were not being stimulated simultaneously became weaker and their dendritic spines shrank.

“This observation was only technically possible because our students Tassilo Jungenitz and Marcel Beining succeeded for the first time in examining plastic changes in stimulated and non-stimulated dendritic spines within individual new-born cells using 2-photon microscopy and viral labelling,” says Stephan Schwarzacher from the Institute for Anatomy at the University Hospital Frankfurt. Peter Jedlicka adds: “The enlargement of stimulated synapses and the shrinking of non-stimulated synapses was at equilibrium. Our computer models predict that this is important for maintaining neuron activity and ensuring their survival.”
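The balance between enlarged and shrunken spines can be expressed as a simple conservation rule. The sketch below is hypothetical and is not the group's computer model: it assumes each spine's size can be summarized as one positive number, enlarges every stimulated spine by a fixed fraction, and shrinks all unstimulated spines by a common factor chosen so that the total size, and the number of spines, stay the same. The function name and the `growth` value are illustrative assumptions.

```python
def balanced_plasticity(spine_sizes, stimulated, growth=0.2):
    """Enlarge stimulated spines and shrink the rest so that total spine size is conserved.

    spine_sizes: list of positive sizes (arbitrary units), one per spine
    stimulated:  set of indices of spines whose synapses were stimulated
    growth:      fractional enlargement of each stimulated spine (hypothetical value)
    """
    # Total size added to the stimulated spines.
    gained = sum(spine_sizes[i] * growth for i in stimulated)
    # Total size currently held by the unstimulated spines.
    unstim_total = sum(s for i, s in enumerate(spine_sizes) if i not in stimulated)
    if gained >= unstim_total:
        raise ValueError("not enough unstimulated spine size to rebalance")
    # Common shrink factor that removes exactly `gained` from the unstimulated spines.
    shrink = 1.0 - gained / unstim_total
    return [s * (1.0 + growth) if i in stimulated else s * shrink
            for i, s in enumerate(spine_sizes)]
```

For example, four equal spines with two of them stimulated end up as two larger and two smaller spines whose sizes still sum to the original total.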

The scientists now want to study the impenetrable, spiny forest of new-born neuron dendrites in detail. They hope to better understand how the balanced changes in dendritic spines and their synapses contribute to the efficient storage of information and consequently to learning processes in the hippocampus.

Social Stress Leads To Changes In Gut Bacteria

Exposure to psychological stress in the form of social conflict alters gut bacteria in Syrian hamsters, according to a new study by Georgia State University.

It has long been said that humans have “gut feelings” about things, but how the gut might communicate those “feelings” to the brain was not known. It has been shown that gut microbiota, the complex community of microorganisms that live in the digestive tracts of humans and other animals, can send signals to the brain and vice versa.

In addition, recent data have indicated that stress can alter the gut microbiota. The most common stress experienced by humans and other animals is social stress, and this stress can trigger or worsen mental illness in humans. Researchers at Georgia State have examined whether mild social stress alters the gut microbiota in Syrian hamsters, and if so, whether this response is different in animals that “win” compared to those that “lose” in conflict situations.

Hamsters are ideal to study social stress because they rapidly form dominance hierarchies when paired with other animals. In this study, pairs of adult males were placed together and they quickly began to compete, resulting in dominant (winner) and subordinate (loser) animals that maintained this status throughout the experiment. Their gut microbes were sampled before and after the first encounter as well as after nine interactions. Sampling was also done in a control group of hamsters that were never paired and thus had no social stress. The researchers’ findings are published in the journal Behavioural Brain Research.

“We found that even a single exposure to social stress causes a change in the gut microbiota, similar to what is seen following other, much more severe physical stressors, and this change gets bigger following repeated exposures,” said Dr. Kim Huhman, Distinguished University Professor of Neuroscience at Georgia State. “Because ‘losers’ show much more stress hormone release than do ‘winners,’ we initially hypothesized that the microbial changes would be more pronounced in animals that lost than in animals that won.”

“Interestingly, we found that social stress, regardless of who won, led to similar overall changes in the microbiota, although the particular bacteria that were impacted were somewhat different in winners and losers. It might be that the impact of social stress was somewhat greater for the subordinate animals, but we can’t say that strongly.”

Another unique finding came from samples that were taken before the animals were ever paired, which were used to determine if any of the preexisting bacteria seemed to correlate with whether an animal turned out to be the winner or loser.

“It’s an intriguing finding that there were some bacteria that seemed to predict whether an animal would become a winner or a loser,” Huhman said.

“These findings suggest that bi-directional communication is occurring, with stress impacting the microbiota, and on the other hand, with some specific bacteria in turn impacting the response to stress,” said Dr. Benoit Chassaing, assistant professor in the Neuroscience Institute at Georgia State.

This exciting possibility, which builds on evidence that gut microbiota can regulate social behavior, is being investigated by Huhman and Chassaing.